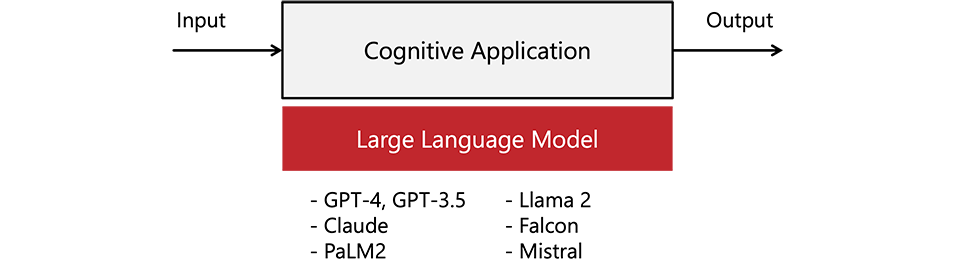

Definition

We define cognitive applications as software programs that transform inputs into outputs with the help of large language models.

ChatGPT, Bard, Amazon Q, and other chatbots are good examples of cognitive applications. Chatbots transform user requests into responses generated by large language models.

Chatbots are instances of interactive cognitive applications, but our definition is much broader and includes software programs that do not require user input.

Our definition is also much broader than that of an intelligent agent.

An agent is a type of cognitive application. At the same time, a cognitive application does not have to have agency, such as the ability to sense the environment or make decisions autonomously.

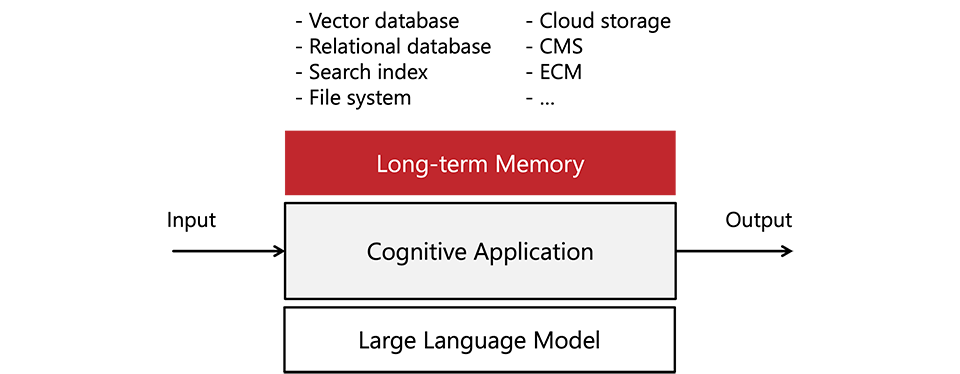

Memory

AI models used by cognitive applications are static. They are frozen at the time of their training.

Fortunately, large models can pick up new information on the fly from the context of a request, without any change to their weights. This near-magical emergent quality is called in-context learning.

For example, with in-context learning, when provided with the latest market news, a static general-purpose AI model can accurately assess current market risks.

By taking advantage of in-context learning, cognitive applications can be fully current and dynamic despite relying on static and frozen AI models.

This can be achieved with the help of a memory module.

When presented with a new input, the application can bundle it with the matching information in its memory and submit the bundle to the AI model for processing.

The process is known as retrieval-augmented generation (RAG).

The memory module of a cognitive application can leverage a range of underlying technologies: vector databases, relational databases, search indices, file systems, cloud storage, and others.
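As a rough illustration of the bundling step described above, the sketch below pairs a new input with matching records from memory before the bundle would be sent to a model. Everything in it is an assumption made for the example: the `Memory` class, the `build_prompt` function, and the word-overlap scoring, which stands in for whatever retrieval a real vector database or search index would provide.

```python
import re
from dataclasses import dataclass, field


def tokenize(text: str) -> set[str]:
    """Lowercase a string and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


@dataclass
class Memory:
    """Hypothetical in-process memory module; a stand-in for a real store."""

    documents: list[str] = field(default_factory=list)

    def add(self, document: str) -> None:
        self.documents.append(document)

    def retrieve(self, query: str, k: int = 2) -> list[str]:
        """Return up to k documents that share at least one word with the query."""
        query_words = tokenize(query)
        scored = [(len(query_words & tokenize(doc)), doc) for doc in self.documents]
        matches = [pair for pair in scored if pair[0] > 0]
        # Highest overlap first; ties keep insertion order because the sort is stable.
        matches.sort(key=lambda pair: pair[0], reverse=True)
        return [doc for _, doc in matches[:k]]


def build_prompt(user_input: str, memory: Memory) -> str:
    """Bundle the input with matching memory records into one model request."""
    context = memory.retrieve(user_input)
    context_block = "\n".join(f"- {doc}" for doc in context) or "- (no matching records)"
    return f"Context:\n{context_block}\n\nRequest:\n{user_input}"


if __name__ == "__main__":
    memory = Memory()
    memory.add("Central bank raised interest rates today.")
    memory.add("Cafeteria menu changed.")
    print(build_prompt("What is the risk from interest rates?", memory))
```

The resulting string is what the application would submit to the model; the model itself stays unchanged, and only the memory contents need to be kept current.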

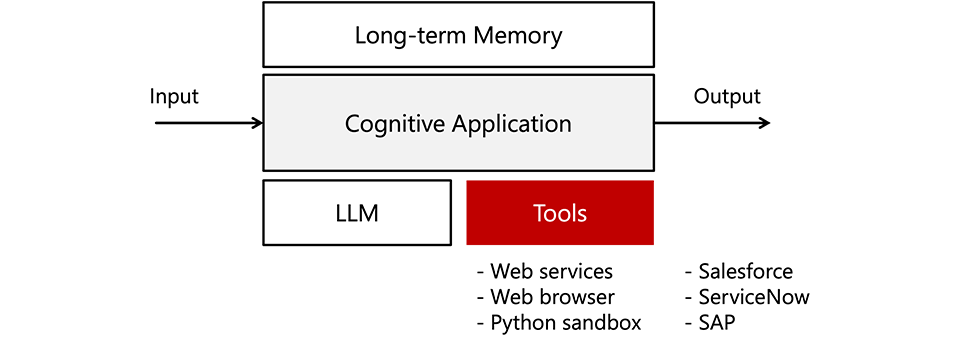

Tools

Large AI models can be amazingly good at some tasks and yet completely fail at others.

For instance, due to how they break text into tokens, some language models handle numbers and mathematical expressions poorly.

Fortunately, to compensate for their weaknesses, AI models can be trained to use tools: calculators, programming languages, web browsers, and others. They can also be trained to use the APIs of enterprise applications such as Salesforce, ServiceNow, and SAP.

To take advantage of this ability, a cognitive application must maintain a catalog of tools that can be used by the models. Each tool in the catalog must have a detailed description and a set of examples.

When passing an input to a model, the cognitive application can provide the list of relevant tools as part of the request. Models cannot use the tools directly. They must ask the app to use a tool on their behalf. The app can use this redirection step to enforce security, alignment, and compliance.
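The catalog and the redirection step can be sketched as follows. This is a minimal illustration under stated assumptions, not a reference design: the `Tool` and `ToolCatalog` names, the dictionary shape of a model's tool request, and the simple allow-list policy are all hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Callable


@dataclass(frozen=True)
class Tool:
    """One catalog entry: a description and examples for the model, plus the callable."""

    name: str
    description: str
    examples: tuple[str, ...]
    run: Callable[..., Any]


class ToolCatalog:
    """Hypothetical catalog; the allow-list stands in for real security policy."""

    def __init__(self, allowed: set[str]) -> None:
        self._tools: dict[str, Tool] = {}
        self._allowed = allowed

    def register(self, tool: Tool) -> None:
        self._tools[tool.name] = tool

    def describe(self) -> list[dict[str, Any]]:
        """Return the permitted tools in a form the app could attach to a request."""
        return [
            {"name": t.name, "description": t.description, "examples": list(t.examples)}
            for t in self._tools.values()
            if t.name in self._allowed
        ]

    def execute(self, request: dict[str, Any]) -> Any:
        """Run a tool on the model's behalf, after checking the policy."""
        name = request["tool"]
        if name not in self._tools:
            raise KeyError(f"unknown tool: {name}")
        if name not in self._allowed:
            raise PermissionError(f"tool not permitted: {name}")
        return self._tools[name].run(**request.get("arguments", {}))


if __name__ == "__main__":
    catalog = ToolCatalog(allowed={"multiply"})
    catalog.register(
        Tool("multiply", "Multiply two numbers.", ("multiply(a=6, b=7)",), lambda a, b: a * b)
    )
    # A model's tool request, in an assumed format, is executed by the app.
    print(catalog.execute({"tool": "multiply", "arguments": {"a": 6, "b": 7}}))
```

Because every call passes through `execute`, the application has a single place to add checks such as authorization, logging, or argument validation.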

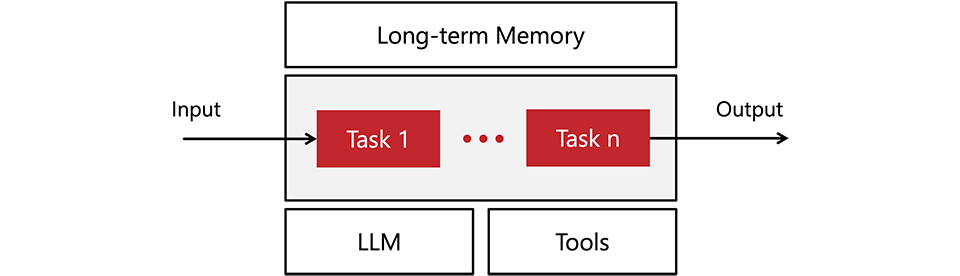

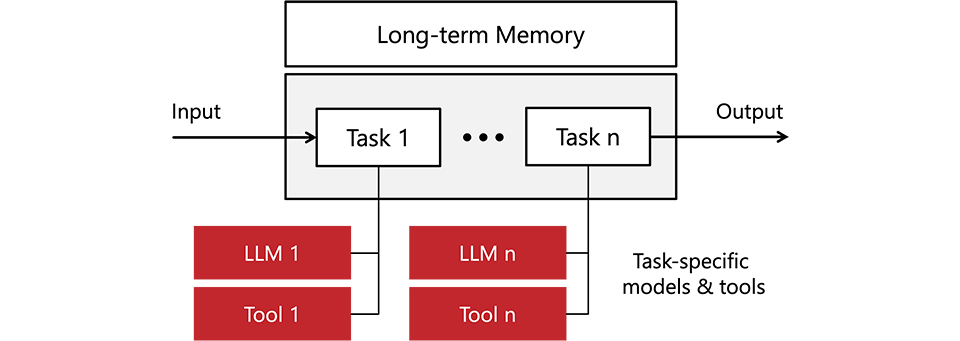

Tasks and Subtasks

Cognitive applications must often perform complex operations to convert their inputs into outputs.

For example, turning a product specification into working software takes many steps and procedures. The same is true for processes in investment management, customer support, and sales engagement.

As illustrated by intelligent agents, large language models can plan and act step-by-step to accomplish a goal. Unfortunately, they are not very consistent in this ability.

For predictable results, the cognitive application must be responsible for breaking tasks into subtasks that can be reliably and consistently performed by the models.

There are many other reasons for task decomposition and orchestration in the application layer, such as performance, security, and explainability.

In healthcare, for instance, it is vitally important to follow a treatment protocol precisely, which cannot be guaranteed when the steps are generated on the fly by a model.
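One way to picture decomposition in the application layer is a fixed sequence of subtasks that the application owns, with the model called once per step. The sketch below assumes a hypothetical `Subtask` type, a `run_task` helper, and a model represented as a plain function from prompt to completion; none of these come from a specific framework.

```python
from dataclasses import dataclass
from typing import Callable

# Assumption: a model is any callable that maps a prompt to a completion.
Model = Callable[[str], str]


@dataclass(frozen=True)
class Subtask:
    """A single step defined by the application, not by the model."""

    name: str
    prompt_template: str  # must contain the placeholder {input}


def run_task(
    subtasks: list[Subtask], model: Model, task_input: str
) -> tuple[str, list[tuple[str, str]]]:
    """Run subtasks in their fixed order, feeding each output into the next step.

    Returns the final output and a trace of (subtask name, output) pairs,
    which can support auditing and explainability.
    """
    trace: list[tuple[str, str]] = []
    current = task_input
    for subtask in subtasks:
        prompt = subtask.prompt_template.format(input=current)
        current = model(prompt)
        trace.append((subtask.name, current))
    return current, trace


if __name__ == "__main__":
    # A stub model so the sketch runs without any external service.
    def stub_model(prompt: str) -> str:
        return f"<{prompt}>"

    steps = [
        Subtask("extract_requirements", "List the requirements in: {input}"),
        Subtask("draft_design", "Draft a design for: {input}"),
    ]
    result, trace = run_task(steps, stub_model, "product specification")
    print(result)
    for name, output in trace:
        print(name, "->", output)
```

The order of steps never depends on model output here, which is the point: the model does the work inside each step, while the application decides which steps run.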

Task-specific Models and Tools

Large AI models are general-purpose. GPT-4, for instance, has been reported to score above most human test takers on several professional exams and can reason about medical problems.

This quality comes at a cost. It can take tens of millions of dollars to complete a single training run of a large model. Running inference on such a model also requires significant time and compute resources.

In many cases, using a general-purpose model is overkill.

For example, a much smaller task-specific model may suffice when the objective is to turn a natural language request into a database query.

Consequently, to achieve maximum efficiency and performance, a cognitive application must use the right tools and models for each task in the flow.
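Choosing a model per task can be as simple as a lookup with a general-purpose fallback. The sketch below is illustrative only: the `ModelSpec` and `ModelRouter` names, the model names, and the unitless `relative_cost` figures are invented for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelSpec:
    """Hypothetical description of a model available to the application."""

    name: str
    relative_cost: float  # illustrative, unitless figure


class ModelRouter:
    """Map task types to task-specific models, falling back to a general one."""

    def __init__(self, default: ModelSpec) -> None:
        self._default = default
        self._routes: dict[str, ModelSpec] = {}

    def register(self, task_type: str, model: ModelSpec) -> None:
        self._routes[task_type] = model

    def select(self, task_type: str) -> ModelSpec:
        """Return the registered model for a task type, or the default."""
        return self._routes.get(task_type, self._default)


if __name__ == "__main__":
    router = ModelRouter(default=ModelSpec("general-llm", relative_cost=100.0))
    router.register("text_to_sql", ModelSpec("small-sql-model", relative_cost=1.0))
    print(router.select("text_to_sql").name)
    print(router.select("contract_review").name)
```

A real router might also weigh latency, context length, or data-residency constraints; the lookup table is only the simplest form of the idea.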

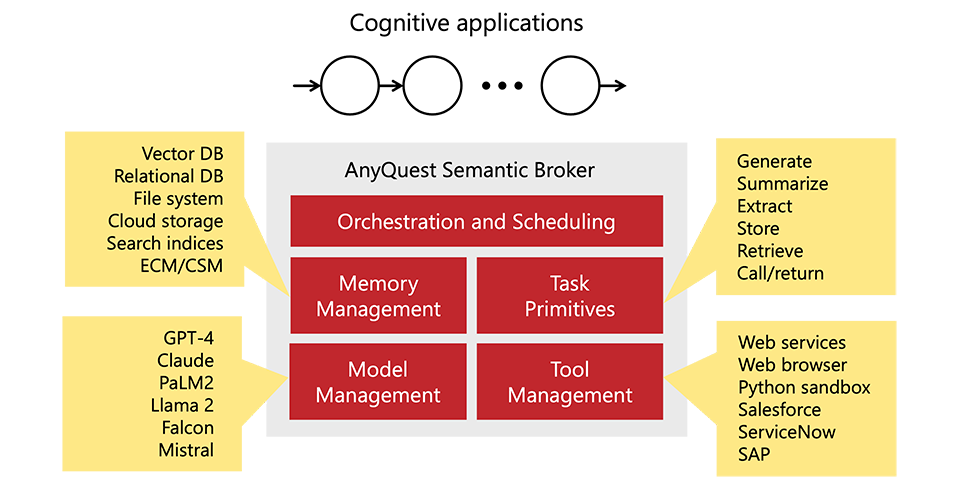

Semantic Brokers

With memory, tasks, task-specific models, and tools, we reach the level of complexity that necessitates the introduction of a dedicated platform for cognitive applications.

To accelerate the development and deployment of such applications, in accordance with good software engineering practices, shared services must be refactored into a separate platform layer.

We call this layer a semantic broker, a platform for cognitive applications.

A semantic broker can be packaged as a library and linked to a cognitive application. It can also be provided as a cloud service and used remotely by any number of such applications.
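To make the library packaging concrete, here is a minimal sketch of a broker that several applications could share. It assumes a hypothetical `SemanticBroker` class whose memory and models are supplied as plain functions; the class name, method names, and prompt layout are all invented for illustration, and a cloud-hosted broker would expose a similar operation over a network API instead.

```python
from typing import Callable

# Assumptions: a model maps a prompt to a completion, and a retriever maps
# an input to a list of matching memory records.
Model = Callable[[str], str]
Retriever = Callable[[str], list[str]]


class SemanticBroker:
    """Hypothetical platform layer bundling memory lookup and model selection."""

    def __init__(
        self, retrieve: Retriever, models: dict[str, Model], default_model: Model
    ) -> None:
        self._retrieve = retrieve
        self._models = models
        self._default_model = default_model

    def handle(self, task_type: str, user_input: str) -> str:
        """Augment the input with memory, pick a model for the task, and call it."""
        context = self._retrieve(user_input)
        model = self._models.get(task_type, self._default_model)
        prompt = "\n".join([*context, user_input])
        return model(prompt)


if __name__ == "__main__":
    # Stubs stand in for real memory and models so the sketch runs offline.
    broker = SemanticBroker(
        retrieve=lambda query: ["Known fact: rates rose today."],
        models={"text_to_sql": lambda prompt: "SQL for: " + prompt},
        default_model=lambda prompt: "General answer for: " + prompt,
    )
    # Two different applications could call the same broker instance.
    print(broker.handle("text_to_sql", "List open orders"))
    print(broker.handle("risk_summary", "Summarize market risk"))
```

Keeping these shared services behind one interface means an application only states the task type and the input, and improvements to retrieval or model selection reach every application at once.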

Conclusion

Cognitive applications are the next step in improving productivity across many industries. Using semantic brokers, we can quickly augment large language models with the necessary memory, tools, and task flows to efficiently build and iterate on cognitive applications that will help us work faster and smarter.