For the first time in human history, AI can be provided and consumed as a service, creating many opportunities for using AI in the enterprise.

Specifically, companies can now use AI without the significant upfront investment previously required to clean data, engineer features, and train, deploy, and monitor models.

Unfortunately, this also presents a set of new challenges, especially in the areas of security, compliance, and control over proprietary data.

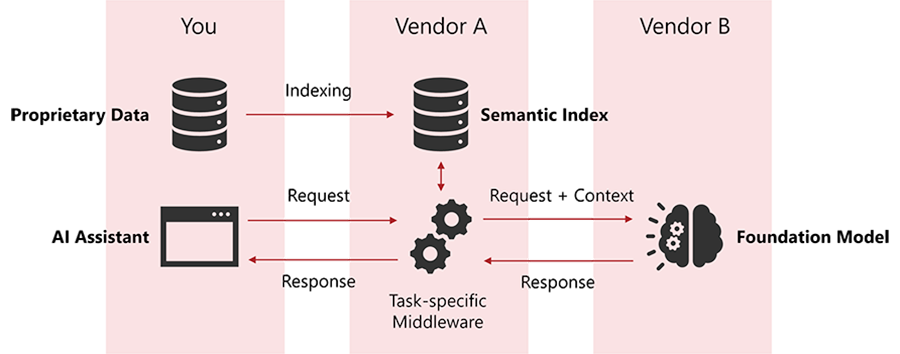

To see why, consider a high-level diagram of a typical generative AI assistant presented below:

There are three parties involved in this solution:

- You – the company using the assistant.

- Vendor A – the vendor providing the AI assistant.

- Vendor B – the vendor providing the large foundation model.

Vendor A hosts a semantic index for the proprietary data owned by the company. The index is used to enrich user requests with relevant data before they are forwarded to the large foundation model. Without proprietary data, the model’s responses would be too generic and outdated to be useful.
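
The enrichment step can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the `SemanticIndex` class, the `enrich_request` function, and the bag-of-words `embed` helper are hypothetical names, and the word-count similarity is only a toy stand-in for the embedding model a real vendor would use.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[term] * b[term] for term in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class SemanticIndex:
    """Hypothetical index over the company's proprietary passages."""

    def __init__(self):
        self._entries = []  # list of (passage, vector) pairs

    def add(self, passage: str) -> None:
        self._entries.append((passage, embed(passage)))

    def search(self, query: str, top_k: int = 2) -> list:
        """Return the top_k passages most similar to the query."""
        q = embed(query)
        ranked = sorted(self._entries, key=lambda e: cosine(q, e[1]), reverse=True)
        return [passage for passage, _ in ranked[:top_k]]


def enrich_request(query: str, index: SemanticIndex, top_k: int = 2) -> str:
    """Build the prompt that would be forwarded to the foundation model."""
    context = "\n".join(f"- {p}" for p in index.search(query, top_k))
    return f"Context:\n{context}\n\nQuestion: {query}"
```

Note that the enriched prompt, proprietary passages included, is what leaves Vendor A for Vendor B, which is why both parties matter for data control.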

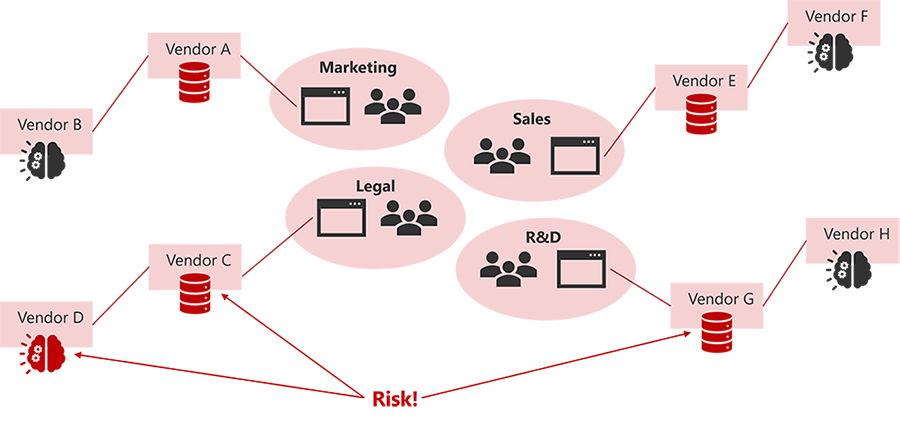

Consider now a company where generative AI assistants are adopted bottom-up in a grass-roots fashion.

As a result of this process, the company’s proprietary data is replicated across multiple semantic indices maintained by different vendors. Each index contains a copy of the data and presents a risk of a data leak.

In addition, the company has no control over which foundation model providers are used by the assistants. Some of these providers may be tempted to use the company’s proprietary data to improve their models, which is yet another risk of a data leak.

Fortunately, we are familiar with this type of challenge and know how to handle it.

In the past, enterprises had similar concerns about cloud services, APIs, and mobile apps. Some of us remember carrying two mobile phones: a BlackBerry issued by the company and an iPhone for personal use.

In all cases, the problem was solved by applying the platform/application pattern:

- Access to third-party services is performed via a centrally managed platform that enforces security and regulatory compliance.

- Applications use the APIs provided by the platform.

- The platform provides low-code tools that enable citizen developers to create new solutions.

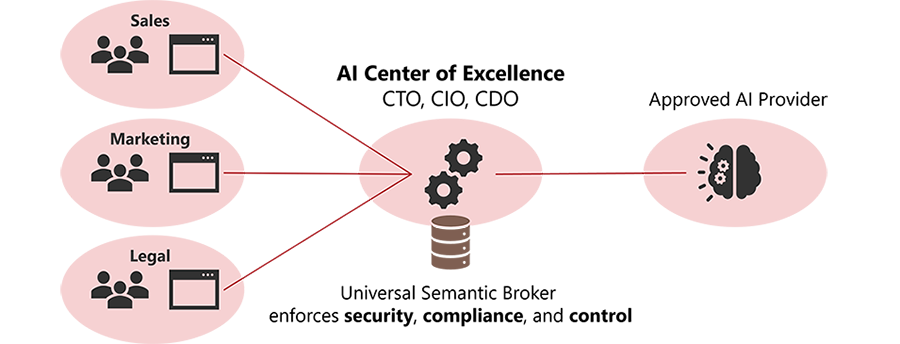

The same approach can be used to address the security and compliance challenges presented by generative AI solutions.

In particular, the company can deploy a semantic broker that can support any number of generative AI assistants and agents used by the various organizational units.
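
As a rough sketch of what such a broker might enforce, consider the following. The `SemanticBroker` and `Document` classes, the tag-based access rule, and the audit log are all hypothetical choices made for illustration, and the keyword match is a naive placeholder for real semantic retrieval.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set, Tuple


@dataclass
class Document:
    text: str
    tag: str  # hypothetical data-classification label, e.g. "hr" or "finance"


@dataclass
class SemanticBroker:
    """Single, centrally managed entry point between assistants and model providers."""

    documents: List[Document] = field(default_factory=list)
    providers: Dict[str, Callable[[str], str]] = field(default_factory=dict)
    assistants: Dict[str, Set[str]] = field(default_factory=dict)
    audit_log: List[Tuple[str, str, str]] = field(default_factory=list)

    def approve_provider(self, name: str, call_model: Callable[[str], str]) -> None:
        """Allow a foundation model provider to receive enriched prompts."""
        self.providers[name] = call_model

    def register_assistant(self, name: str, allowed_tags: Set[str]) -> None:
        """Record which data classifications an assistant may read."""
        self.assistants[name] = set(allowed_tags)

    def handle(self, assistant: str, provider: str, query: str) -> str:
        if assistant not in self.assistants:
            raise PermissionError(f"unknown assistant: {assistant}")
        if provider not in self.providers:
            raise PermissionError(f"provider not approved: {provider}")
        allowed = self.assistants[assistant]
        words = set(query.lower().split())
        # Naive keyword overlap stands in for semantic retrieval.
        context = [
            d.text
            for d in self.documents
            if d.tag in allowed and words & set(d.text.lower().split())
        ]
        prompt = "Context:\n" + "\n".join(context) + f"\n\nQuestion: {query}"
        self.audit_log.append((assistant, provider, query))
        return self.providers[provider](prompt)
```

In this sketch the proprietary data lives in one place, every request is logged, and only approved providers ever see an enriched prompt. Each assistant reaches the data and the models solely through `handle`.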

The semantic broker can be centrally managed by a Generative AI Center of Excellence, which can also be responsible for establishing best practices, processes, solution patterns, and personnel training, all of which are vital components of the business AI Quotient (AQ).